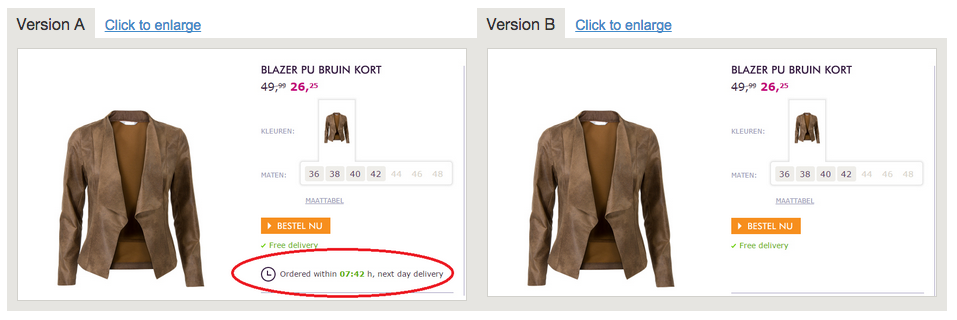

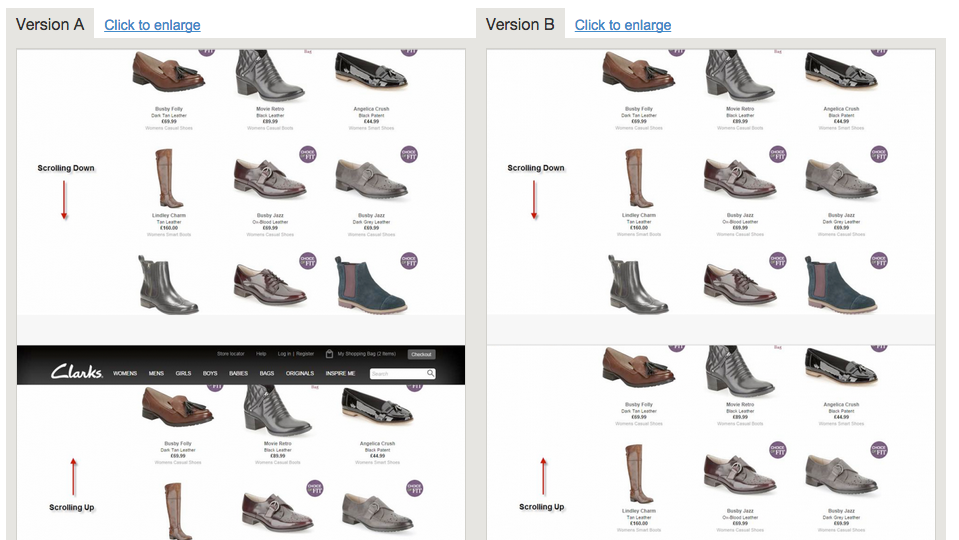

We love the guys over at Which Test Won, who do amazing A/B testing, and we have written about them more than once because their tests are so great! This test was awesome. The company Extra Space Storage tested two versions of its mobile website, one with a blue call-to-action button and the other with an orange button. Visitors were there to make a reservation and move into a storage unit.

They wanted to test the button color on mobile to increase storage rentals, and most of their visits came from mobile devices. Version A had a blue CTA button and version B had an orange one.

The Winner?

The blue button was the control. The orange button killed it with a 7.8% increase in reservations!

From Which Test Won:

“Research shows blue tends to be most people’s favorite color. Blue is used on many social media sites and in the financial industry to convey trust and security.

“Research has also found, yellow and orange typically elicit cheerful feelings. For many, yellow is the color of happiness. But, for many Extra Space Storage customers, it may not be a happy time. Again, the color may have been incongruent with visitors’ feelings.”

Something so small can be oh-so-right!